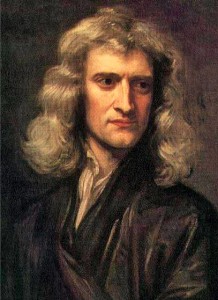

Sir Isaac Newton, the single best candidate for the first true scientist, was a devout Christian. It is fascinating to wonder how Sir Isaac would have responded to the way the scientific study of nature has since demystified much of what he believed.

Scientific Spirit

How romantics and technophiles can reconcile our love-hate relationship with scientific progress

by Joseph Grosso

Published in the March/April 2011 Humanist

In March 2009, headlines blared across the front pages of New York’s Daily News that were at once stimulating, scary, and altogether predictable. Dr. Jeff Steinberg of the Fertility Institute, which has offices in Los Angeles and New York, announced that within six months he would enable would-be parents to choose various traits for their babies, such as hair, eye, and even skin color. The science behind the procedure is a technique called preimplantation genetic diagnosis (PGD), in which a cell is taken from each embryo a couple has produced in vitro and screened for possible inherited genetic diseases. An embryo that passes the screening is then transferred to the mother’s uterus to begin the pregnancy. Although most human traits are shaped by multiple genetic factors, PGD could also, in principle, be used to screen embryos for desired cosmetic traits to a certain level of probability.

The news brought forth the expected chorus of critics, ranging from religious groups to bioethicists, as well as doctors who questioned whether Steinberg had the ability to fulfill his lofty ambition. (Incidentally, the procedure has already been banned in several countries, including Germany, Ireland, and Switzerland.) In the face of the storm, Steinberg, who along with other fertility doctors already offers parents the opportunity to select their child’s sex, withdrew his designer-baby offer while declaring of his adversaries: “Genetic health is the wave of the future. It’s already happening and it’s not going to go away. It’s going to expand. So if they got major problems with it, they need to sit down and really examine their own consciences because there’s nothing that’s going to stop it.”

Only a few days later, President Obama announced his reversal of George W. Bush’s stem cell policy. Bush had restricted federal funding to stem cell lines already in use before his ban took effect (most of which scientists deemed useless); Obama pledged to “vigorously support” new research that may eventually yield fantastic results in the understanding and treatment of diseases and spinal injuries. The decision could eventually lead Congress to overturn the Dickey-Wicker amendment, which bans the use of taxpayer money to create embryos for research. Much like Steinberg’s plans for PGD, Obama’s decision drew a wide chorus of criticism whose main thrust echoes the abortion debate over the extent of the rights that should be afforded to an embryo or fetus. The question will gain even greater relevance in the coming debate over creating embryos for the sole purpose of using their cells.

While much of the media storm blows toward the genetic revolution and related technologies that may someday redefine what it is to be human, other revolutions are stirring in the realm of science. No less a figure than Bill Gates predicts that the next revolutionary industry will be robotics. Robots, which already play a large role in industries such as automobile manufacturing, may soon be part of our day-to-day lives in various forms. Writing in the January 2007 issue of Scientific American, Gates somewhat downplays the anthropomorphic features most robots will have in favor of detailing what they will actually do for us:

It seems quite likely, however, that robots will perform an important role in providing physical assistance and even companionship for the elderly. Robotic devices will probably help people with disabilities get around and extend the strength and endurance of soldiers, construction workers and medical professionals… They will enable health care workers to diagnose and treat patients who may be thousands of miles away, and they will be a central feature of security systems and search-and-rescue missions.

From designer babies to robots, scenarios such as these have long been part of the human psyche in ways both exciting and horrifying. Countless books and films have speculated on the dark side of science: science gone badly wrong by going “too far” in reshaping the world, and then oppressing or even exterminating those who pioneered it. The machines take over, new races and categories of creatures colonize their creators, and technology becomes an instrument of super-oppression. Aldous Huxley’s Brave New World sets the literary standard here, and Isaac Asimov’s I, Robot stories may well prove prophetic. There are also Jurassic Park, The Matrix, Maximum Overdrive, and the Terminator movies. Michel Houellebecq’s novels The Elementary Particles and The Possibility of an Island are more recent examples of genetics gone wild.

The love-hate relationship between Homo sapiens and science reaches right down to the shaky foundations of the meaning of life, and it is certainly paradoxical. On one hand, the expansion of scientific knowledge always seems to bring further recognition of humanity’s rather small place in the grand scheme of things. It is now well beyond dispute that the earth isn’t the center of the solar system and that the Milky Way is just one of hundreds of billions of galaxies in the known universe, and there’s a distinct possibility that the universe we find ourselves in is far from the only one. On top of it all, we know that in 5 billion years or so our sun will begin to die as it runs out of hydrogen, and then helium, in its core, swelling until it engulfs at least Mercury and Venus and perhaps Earth. Even if our planet isn’t completely destroyed, its oceans and rivers will certainly boil away and any remaining life will quickly fry. In the interim we’ll have to contend with the thousands of near-Earth objects (NEOs), such as asteroids and comets, that could slam into Earth at any moment. One such collision is thought to have created our moon out of a chunk of the Earth, and another likely wiped out the dinosaurs 65 million years ago. Others may have played at least a part in the four other major extinctions in Earth’s history. And it’s not only in the cosmic sense that science can make us feel insignificant. For all our apparent dominance of the earth, more than 100 billion bacteria live and work in a single centimeter of our intestine, a number that rivals the total number of humans who have ever lived. Science also tells us that our emotions are chemical transfers in the brain and that our most basic mission is simply to pass along our genes.

On the other hand, while science makes traditional metaphysics like religion harder to sustain, it empowers humanity to drastically increase its control of its environment, in other words to “play God.” And in the process, humanity has the potential to destroy, or at least greatly alter, itself. Airplanes, for instance, have brought the world together in cultural exchange and trade far more closely than most people throughout history could have imagined. Yet planes have also enabled destruction, whether in the form of bombed-out cities from Dresden to Hanoi or as weapons themselves on September 11, 2001. The 2003 SARS epidemic made clear just how fast pathogens can travel around the globe by air.

In his book Parallel Worlds, physicist Michio Kaku analyzes Earth in terms of the Kardashev scale, a ranking system for civilizations based on their energy output (proposed by Russian astronomer Nikolai Kardashev in 1964). A Type I civilization has harnessed all of the solar energy that strikes its planet; it may be able to modify the weather or build cities on the ocean. A Type II civilization has exhausted the power of a single planet and harnessed the power of an entire star. Its members might have colonized some nearby planets and may even be able to travel at the speed of light. A Type III civilization would be able to harness the power of billions of stars and would therefore have galaxy-wide responsibilities.
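A fractional rating only makes sense on a continuous version of Kardashev’s three types. One common choice, used here as an assumption rather than taken from Parallel Worlds, is Carl Sagan’s logarithmic interpolation, in which a civilization’s rating $K$ depends on its total power use $P$ in watts. Worked through for a rating of 0.7, it shows why such a civilization falls a thousandfold short of Type I:

```latex
\begin{aligned}
K &= \frac{\log_{10} P - 6}{10}, \qquad P \text{ in watts (Sagan's interpolation, assumed)} \\
P(K = 1) &= 10^{10(1) + 6} = 10^{16}\ \text{W} \quad \text{(Type I)} \\
P(K = 0.7) &= 10^{10(0.7) + 6} = 10^{13}\ \text{W} \\
\frac{P(K = 1)}{P(K = 0.7)} &= \frac{10^{16}}{10^{13}} = 10^{3}
\end{aligned}
```

On this scale each tenth of a point marks a tenfold increase in power, which is why a civilization that looks close to Type I on paper is still three orders of magnitude away.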

At the moment, Kaku puts our own civilization at 0.7, which, while within striking distance of Type I, represents a thousand times less energy production, mainly because most of our energy still comes from the remains of dead plants in the form of coal and oil. In other words, scientifically speaking, we’re still in our infancy. And yet plenty of ideas floating around in the world of theory could give even semi-technophobes sleepless nights. A reader of popular science literature alone would come across ideas for:

- Large-scale domestication of the planet’s oceans. With most of the world’s fisheries in severe decline, domestication offers a possible way to stabilize fish populations and save the worldwide fishing industry and, of course, the food supply. However, success in this venture would reduce evolutionary marvels like the bluefin tuna and other such species to the status of sheep or cows (sheep were first domesticated 10,000 years ago, cows around 8,000), a scenario that many biologists and other wildlife enthusiasts shudder to contemplate.

- Militarization of outer space. Thus far this effort has been largely orchestrated by the U.S. Space Command in a quest for what the military calls “full-spectrum dominance,” though Israel and the EU have more recently joined the fray. According to various U.S. Space Command publications, outer space promises to be the fourth dimension of warfare, the battleground of the future. While possibilities such as space-based lasers entice military planners, they also risk starting another dangerous arms race (arguably in violation of the 1967 Outer Space Treaty, which reserves space for peaceful uses), causing devastating accidents, and costing billions of dollars.

There are also the zanier possibilities of time travel through wormholes, the colonization of Mars, and all manner of developments in artificial intelligence. The world is undergoing numerous Cartesian revolutions at once. The question is whether certain compartments of Pandora’s box should be opened at all. If the answer is an emphatic yes, the questions then become: What will the world look like? Will we even recognize it? And, most critically, how will we feel about it?

In the Beginning: A Historical Context

The traditional account of the birth of modern science centers on Francis Bacon and Rene Descartes: Bacon for popularizing observation and experimentation, particularly in Novum Organum (1620), and Descartes for his mechanical worldview and skepticism, most famously expressed in Discourse on the Method (1637) as “Je pense, donc je suis” (“I think, therefore I am”). Like all official stories, though, this one is open to revision, or at least to a good deal of expansion.

In A People’s History of Science, Clifford Conner reminds us that science is mainly a collective enterprise and that the drivers of technological progress were the artisans, miners, and craftsmen who did the dirty work of hands-on experimentation that advanced metallurgy, medicine, and navigation. However, even a more traditional reading forces us to acknowledge the continuity of discovery. A good argument could be made that the revolution began in 1543, when Nicolaus Copernicus, shortly before his death, published Revolutions of the Heavenly Spheres, and the Flemish anatomist Andreas Vesalius published The Structure of the Human Body, the first book on anatomy based on the author’s own dissections. Of course, neither Copernicus nor Vesalius was born with such knowledge; both learned much from Renaissance thought and techniques. For the Renaissance to occur, scholars were needed to translate the works of Arabic scholars, who had preserved and expanded the scientific and mathematical works of the Greeks, who learned much from the Babylonians, who built on the Sumerians, and so on.

Still, something was going on in the seventeenth century that deserves the banner “revolutionary.” After all, it was a trailblazing century that included Galileo’s confirmation of Copernicus’ heliocentric solar system, Johannes Kepler’s laws of planetary motion, William Harvey’s revelation about the circulation of blood, the independent discovery of calculus by Isaac Newton and Gottfried Leibniz, John Woodward’s An Essay Toward a Natural History of the Earth (1695), which spearheaded what would become modern geology, Robert Boyle’s bringing Sir Francis Bacon’s methods to fruition, and, to top it all off, Newton’s discovery of universal gravitation and the laws of motion. By the end of the century, a model of the universe was in place that, with modifications, still stands, along with the foundations of modern mathematics and most of the branches of modern science.

To say the least, many of the new intellectual kids on the block weren’t readily accepted by the worldly powers. The seventeenth century began with Giordano Bruno, an Italian philosopher and proponent of heliocentrism and an infinite universe, being burned at the stake for heresy and blasphemy in 1600. Rene Descartes fled France for the Netherlands, where Benedict Spinoza’s Jewish family had earlier found refuge from persecution in Portugal. Galileo spent the last nine years of his life under house arrest, all in the context of a violent century of war and witch burning. However, despite clerical opposition (which was by no means universal), the Aristotelian model that had held sway for so long was eventually overthrown.

In fact, it wasn’t long before the new science received patronage from states that recognized its practical value. The Accademia del Cimento came into being in Florence in 1657 with funding from the Medici family, the Royal Society was founded in London in 1660, and the Royal Academy of Sciences in Paris followed in 1666 under the government of Louis XIV. While the Royal Society was not a direct organ of the state, it wasn’t difficult to see which way the wind was blowing, especially in the aftermath of the English Revolution. Anyone with the rank of baron or above was automatically eligible to be a Fellow. Historian Christopher Hill explains: “The Society wanted science henceforth to be apolitical—which then as now meant conservative.”

Instead, attacks on the new science were soon launched by other intellectuals, and their echoes are still heard today. One of William Blake’s most famous paintings shows Newton taking geometrical measurements while sitting naked on a rock, signifying Blake’s opposition to a singular focus on mechanical laws in interpreting nature. Goethe, who considered himself a scientist above all else, had similar objections to Newtonian physics; his longest work turned out to be his Theory of Colors, written to refute Newton’s optical theory. The Romantics wrote longingly of union with a non-mechanical, mysterious nature; the Gothic movement took off with The Castle of Otranto in 1764; Mary Shelley’s Frankenstein was published in 1818 and Bram Stoker’s Dracula in 1897; the Great Awakening spread throughout the American colonies; and the Luddites smashed machines in northern England, unknowingly coining the term by which all future technophobes would be branded. Thomas Pynchon, in a 1984 essay titled “Is It O.K. to Be a Luddite?,” wrote that all of the above “each in its way expressed the same profound unwillingness to give up elements of faith, however ‘irrational,’ to an emerging technological order that might or might not know what it was doing.”

We can call it the Romantic Revolution or, seen in a different light, the Romantic counter-revolution. Edward O. Wilson, in his 1998 book Consilience: The Unity of Knowledge, puts it like this:

For those who for so long thus feared science as Faustian rather than Promethean, the Enlightenment program posed a grave threat to spiritual freedom, even to life itself. What is the answer to such a threat? Revolt! Return to natural man, reassert the primacy of individual imagination and confidence in immortality…promote a Romantic Revolution.

Of course, this was never, and could never be, a zero-sum game. Even the most dedicated Romantic or hardcore technophobe couldn’t live without modern science and the technology it spawns, and it’s unlikely they would care to for a moment. Instead, an uneasy but inevitable coexistence began, one that continued right through the twentieth-century development of quantum physics, chaos theory, and genetics, even as science became more and more specialized and more mathematically inaccessible to lay people.

C. P. Snow and Consilience: A Cultural Advance

It’s been more than fifty years since British scientist and novelist C. P. Snow caused a stir with his famous Rede lecture of 1959 titled, “The Two Cultures.” In it, Snow lamented:

I believe the intellectual life of the whole of western society is increasingly being split into two polar groups… Literary intellectuals [“natural Luddites” in Snow’s words] at one pole—at the other scientists, and as the most representative, the physical scientists. Between the two a gulf of mutual incomprehension—sometimes (particularly among the young) hostility and dislike, but worst of all lack of understanding.

How far Snow’s argument was, or could be, true is open to question. At the more extreme end, the divide appears in the postmodern notion that modern (or, more crudely, “Western”) science is just one of many equally valid sources of knowledge and/or interpretations of reality, historically dominated by white men and employed in the oppression of non-European people all over the world. (Historically, this last charge is for the most part true, given eugenics, social Darwinism, chemical weapons used against anti-colonial uprisings, and so forth.) Such a relativistic critique has been popular in some university philosophy circles and does feed the fear of science as a destructive, spiritless force. This was the sort of misunderstanding Snow had in mind.

Snow’s theme was relevant enough to be revisited a few years ago in a debate between two of the greatest American scientists, the aforementioned E. O. Wilson and the late Stephen Jay Gould. In Consilience, Wilson proposes bridging the gap between science and the humanities by uniting both under the same laws of science. Citing the scholarly example of the Enlightenment and using the method of reductionism (breaking complexity down into its smaller parts as a route to a greater understanding of the whole), Wilson is confident that everything is, in a sense, connected, chiefly through the workings of the mind.

While somewhat dismissive of other forms of cognition, Wilson takes the debatable view that the same basic laws cut across all disciplines. Since different fields overlap on questions of policy, he argues that unified knowledge offers the best potential way forward. Regarding the arts, Wilson writes that an understanding of art’s biological origins and cultural universals is the path to eventual unification with the natural sciences. Throughout the book, Wilson is careful to stress that this unification will not mean the end of the humanities (“Scientists are not conquistadors out to melt Inca gold”).

In such statements Wilson seems to anticipate the reception he will get from some critics, and indeed a fair number rejected his reasoning, seeing his model as a takeover of the humanities by natural science. More importantly, Wilson’s model drew a response from Gould in his final book, The Hedgehog, the Fox, and the Magister’s Pox: Mending the Gap between Science and the Humanities, published in 2003, the year after his untimely death. In it, Gould argued that science and the humanities work in different ways and cannot be morphed into a “simple coherence” but can and should be “ineluctably yoked” toward the common goal of human wisdom. Gould agreed with Wilson that the attempt to link the sciences and humanities is the “greatest enterprise of the mind.” However, he took issue with Wilson’s reductionism. Put simply, Gould believed that not everything can be understood by being broken down into its smaller parts. Though he acknowledged reductionism’s successes, Gould challenged its ultimate supremacy with the concepts of contingency and emergence. Contingency refers to unique, unexpected events that happen given enough time, such as the asteroid that killed the dinosaurs. Emergence deals with nonlinear interactions. For example, Gould described a nonlinear interaction between two species, A and B. His scenario starts with an experimentally established fact: species A always beats species B under a definite set of circumstances. When a species C is added to the mix, however, species A wins only half the time, with species B winning just as often. Then species D and E are added, and so on. The point is that the relative frequency of victory for species A and B turns out to depend on hundreds of varying environmental factors. Both contingency and emergence would limit the effectiveness of reductionism, and so seem to undermine Wilson’s model.

As for the humanities, Gould claimed that, given the Renaissance’s emphasis on recovering and understanding classical knowledge, an initial clash with scientists seeking to expand knowledge through direct observation was inevitable; to his mind, however, that time had long passed. Yet just as reductionism alone would not suffice in the natural sciences, Gould argued, so the concerns of the humanities cannot be resolved by the methods of science. Instead, science and the humanities should complement and learn from each other.

It will be some time before we know whether Wilson’s ambitious model of consilience actually works or whether Gould’s model of mutual respect and reinforcement proves more practical. Either way, the importance of the consilience concept should not be underestimated. Some kind of improved relationship between the sciences and humanities can only help, particularly now, when both are confronted by militant religious fundamentalism (worse still, fundamentalism that rejects science while seeking to employ its deadliest products, nuclear weapons). The divide between science and the humanities on one side and religious fundamentalism on the other is now the most polarizing of all, and so it becomes necessary for science and the humanities to become natural allies.

A New Age: We Are the World

In 2008 the Geological Society of America joined an already established chorus of scientists in announcing that the world had entered a new period of geological history, moving beyond the Holocene epoch, which is considered to have begun after the last Ice Age some 12,000 years ago and is characterized by the generally stable climate that allowed human civilization to flourish. Citing such issues as the buildup of greenhouse gases, the transformation of land surfaces, the rate of species extinction in tropical forests, and the acidification of the oceans, the Society’s committee declared that we are now living in the Anthropocene epoch, a period in which human civilization essentially creates, or at least dominantly influences, its own environment. Scientists who endorse the Anthropocene disagree about precisely when it began. A logical date may be 1784, with the advent of James Watt’s steam engine, or a few years later with the explosion of the Industrial Revolution. William Ruddiman argued in Plows, Plagues, and Petroleum: How Humans Took Control of Climate that the Anthropocene actually began with the Neolithic Revolution, when the birth of agriculture and animal domestication largely replaced hunter-gatherer societies and released increasing amounts of methane and CO2 into the atmosphere, interrupting the regular glacial cycle.

Whatever the case, the idea that the planet is now, or soon will be, a biogeochemical entity of our own making only increases the importance of science and the responsibility of the species that practices it. It also means that the questions we tackle and the technologies we spawn will inevitably grow more contentious. Perhaps much of the fear of science stems from an overwhelming sense of its inevitability. The cliché “you can’t stop science” is overused, but it aptly expresses the hopes and fears embedded in the scientific enterprise. At the same time, it speaks to the importance of an educated public that can confront, channel, and make use of science as it advances.

Scientists often declare the need to bring their work to the public through popular formats, festivals, and so on. This is critical, but it also cuts both ways. An educated, democratic public is necessary not only to learn from scientists but also to hold scientists, and the governments they often work for, accountable. In this way a consilience movement is especially relevant, beyond the intellectual satisfaction that uniting science and the humanities would bring. It may eventually prove the best way to hold off the worst potentials of science and keep them out of the hands of religious fundamentalists and other reactionaries, making science the progressive force it should be. Creating a brave new world appears to be our destiny whether we like it or not. With a consilience movement, a dedicated citizenry, and a spot of luck, perhaps that phrase will take on a whole new and better meaning.