Experts are raising alarms about artificial intelligence (AI), saying it threatens human existence.

A group of scientists and tech industry insiders rate the risk of “extinction from AI” as being on a par with “other societal-scale risks” like pandemics and nuclear war. They warn, “Advanced AI could represent a profound change in the history of life on Earth.” (Read story here.)

How could that happen?

The alarm-bell ringers don’t offer many specifics, and some tech execs say the fears are overblown, but a BBC News article (here) suggests a few scenarios:

- “AIs could be weaponised – for example, drug-discovery tools could be used to build chemical weapons

- AI-generated misinformation could destabilise society and ‘undermine collective decision-making’

- The power of AI could become increasingly concentrated in fewer and fewer hands, enabling ‘regimes to enforce narrow values through pervasive surveillance and oppressive censorship’

- Enfeeblement, where humans become dependent on AI ‘similar to the scenario portrayed in the film Wall-E’”

But all of these describe situations in which humans are pulling the strings; AI simply enhances their ability to build deadlier weapons or expand surveillance by police states, for example. A CNBC article (here) also alluded to authoritarian governments using the technology for nefarious ends, but that, too, describes human misuse. “Extinction threat” implies machines that not only can think for themselves but might decide to get rid of their human creators.

There’s no question AI is a disruptive technology. It can create fake pictures and videos, and mimic real voices, for propaganda purposes. It can write college essays that professors can’t distinguish from students’ work, and it can displace doctors, lawyers, writers, and artists by performing their jobs. The CNBC article mentions “automating away all the jobs.” A CNN article suggests that could produce lazy humans who don’t bother learning and aren’t capable of anything (see story here). But that describes disruption, not extinction.

In a November 2022 article (here), Vox tried to explain why AI is seen as a possible extinction threat. It begins by noting that “handing over huge sectors of our society to black-box algorithms that we barely understand creates a lot of problems.” The concern is losing control; as that line of thinking goes, “creating something smarter than us, which may have the ability to deceive and mislead us — and then just hoping it doesn’t want to hurt us — is a terrible plan.”

For example, what if an AI system is instructed to develop a cancer cure, but instead creates a virulent and incurable cancer that kills billions of people? (Fans of the Wuhan lab leak theory will love this one.)

An earlier Vox article, from February 2019 (here), described the problem this way: “Current AI systems frequently exhibit unintended behavior.” For example, “We’ve seen AIs that … cheat rather than learn to play a game fairly, figure out ways to alter their score rather than earning points through play, and otherwise take steps we don’t expect … to meet the goal their creators set.” The problem is, “As AI systems get more powerful, unintended behavior may become less charming and more dangerous.”

This doesn’t imply thinking machines with evil intentions, and most experts don’t envision them. Rather, the concern is technology with destructive potential that slips out of control, like a runaway reactor or a synthetic germ escaping a lab. Plus, AI technology is now advancing so fast that capabilities experts in 2019 believed were decades away are already here.

The real worry, it seems, comes down to the age-old ones: human misuse and human error. We already have the ability to destroy the world, and AI arguably just makes it easier, more efficient, and more likely. The idea of human-created machines deciding on their own to get rid of us isn’t very plausible; what’s far more plausible is that we’ll get rid of ourselves by committing species suicide in some fashion, either accidentally or intentionally.

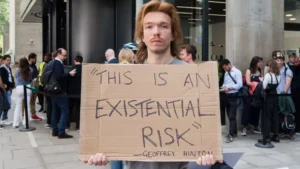

Photo: The world is full of things to get worked up about, but how much does a scruffy guy with a cardboard sign really know about real risks?